Flexible deployment has always been a mainstay of Corticon. In a few steps, you can deploy Corticon on Docker.

Docker is a very popular platform to build, share and run an application with all its dependencies. Because a container packages the application together with all its libraries and dependencies, you can rest assured that the application runs the same way on any Docker host, regardless of the environment. Containers are lighter than virtual machines, so they are faster to create and quicker to start, and they scale efficiently when demand for your application grows.

Corticon Server can be deployed to Docker as a web application. Once Corticon is deployed, you can access it as a REST or a SOAP service.

Deploying Corticon Server to Docker is done in 3 easy steps:

- First, you create a docker file with your Corticon configuration.

- Second, you build the Docker image from the docker file.

- Third, you run your docker container.

Now, let's go through each step in more detail.

Creating your Docker File with Corticon Configuration

A docker file to deploy Corticon Server on Tomcat is now available on ESD. This makes it easy for you to deploy your Corticon rules as a service to your docker container. The bundled docker file has the configuration needed to deploy Corticon Server to a base Tomcat image (9.0).

Bundled Docker File

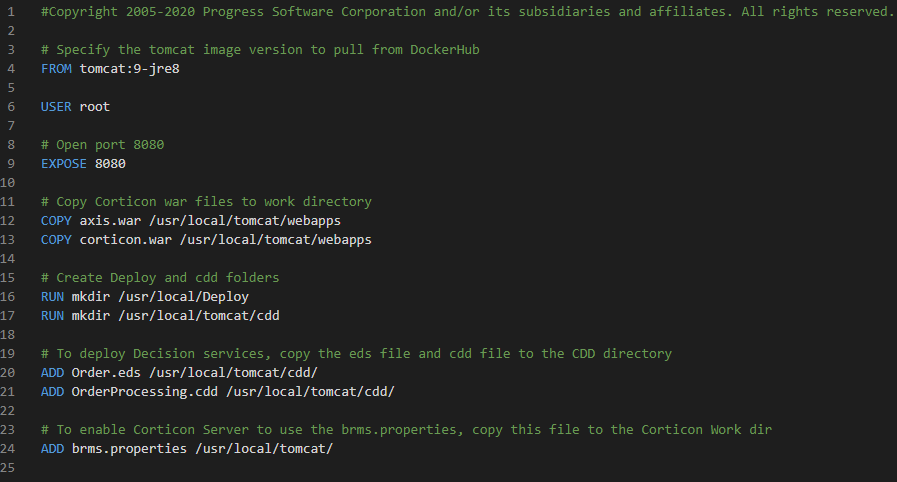

Let’s review the contents of the bundled docker file.

A docker file always begins with a FROM statement, which specifies the base image for the container. The bundled docker file uses a public Tomcat 9 / JRE 8 image from Docker Hub. You can also choose any other supported image from Docker Hub or build your own.

Let's say you want to use Tomcat 9.0.53 with a different JRE version. You can do that by changing the FROM statement to use a different image.

For example: FROM tomcat:9.0.53-jre11

This bases the container on the Tomcat 9.0.53 image with JRE 11.

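You then set USER root. This makes root the user for the following RUN instructions and for the Tomcat process started by the container. It applies inside the container only; it does not give the container control of the host system.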

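Then, using EXPOSE, you tell Docker that the container listens on the stated network port at runtime. EXPOSE only documents the port; the port is published to the host with the -p option of docker run. If you want a different port, you can change the number here, but Tomcat's connector must also be configured to listen on that port.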
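Put together, the start of such a docker file can be sketched as follows. This is a minimal sketch, not the bundled file: it is written as a shell heredoc that creates a file named Dockerfile, and it assumes the tomcat:9.0.53-jre11 image from the example above and Tomcat's default HTTP port 8080.

```shell
#!/bin/sh
# Sketch: write the first instructions of a Corticon docker file.
# Assumptions: tomcat:9.0.53-jre11 base image, Tomcat listening on port 8080.
cat > Dockerfile <<'EOF'
# Base image: Tomcat 9.0.53 running on JRE 11
FROM tomcat:9.0.53-jre11
# Run the following RUN instructions and Tomcat as root inside the container
USER root
# Document the port Tomcat listens on; docker run -p publishes it
EXPOSE 8080
EOF
```

Dockerfile comments must sit on their own lines, which is why each instruction is preceded by a comment line rather than followed by one.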

The next step is to deploy the Corticon web-apps to the Tomcat server. This is done by copying axis.war and corticon.war to the Tomcat webapps directory.
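A minimal sketch of that copy step, appended to the same Dockerfile. It assumes axis.war and corticon.war sit in the build context next to the Dockerfile, and that the image uses the /usr/local/tomcat layout of the official Tomcat images.

```shell
#!/bin/sh
# Sketch: append the web-app deployment step to the docker file.
# Assumption: axis.war and corticon.war are in the build context (".").
cat >> Dockerfile <<'EOF'
# Deploy the Corticon web-apps into Tomcat's webapps directory
COPY axis.war corticon.war /usr/local/tomcat/webapps/
EOF
```

With more than one source file, Docker requires the COPY destination to be a directory ending in "/".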

If you want to deploy decision services using a .cdd file, lines 16-21 of the bundled docker file show you how to do that. The bundled docker file deploys OrderProcessing.eds, but you can deploy any decision service you choose. You first create the directories ‘Deploy’ and ‘cdd’, and then copy your .eds and .cdd files to the cdd directory. The cdd directory is created in the Corticon Work Directory, which in this scenario is /usr/local/tomcat. This ensures that the decision service is deployed once Corticon Server is up and running.
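The cdd deployment can be sketched like this. OrderProcessing.eds comes from the bundled example, while OrderProcessing.cdd is a hypothetical file name, and placing Deploy next to cdd in the work directory is an assumption; lines 16-21 of the bundled docker file remain the reference for the exact layout.

```shell
#!/bin/sh
# Sketch: deploy a decision service through a .cdd file.
# Assumptions: OrderProcessing.cdd is a hypothetical name, both files are in the
# build context, and Deploy is created next to cdd in the work directory.
cat >> Dockerfile <<'EOF'
# Create the Deploy and cdd directories in the Corticon Work Directory
RUN mkdir -p /usr/local/tomcat/Deploy /usr/local/tomcat/cdd
# Copy the decision service and its .cdd file to the cdd directory
COPY OrderProcessing.eds OrderProcessing.cdd /usr/local/tomcat/cdd/
EOF
```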

For Corticon Server to use your brms.properties file, copy it to the Corticon Work Directory, which in this case is /usr/local/tomcat.
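As a minimal sketch, assuming brms.properties sits in the build context next to the Dockerfile:

```shell
#!/bin/sh
# Sketch: make Corticon Server pick up a custom brms.properties.
# Assumption: brms.properties is in the build context (".").
cat >> Dockerfile <<'EOF'
# Copy brms.properties to the Corticon Work Directory (/usr/local/tomcat here)
COPY brms.properties /usr/local/tomcat/
EOF
```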

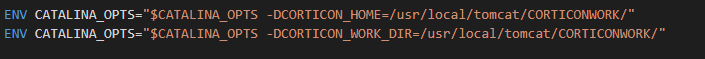

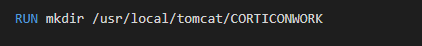

Setting Corticon Home and Work Directory

You can set the Corticon Home and Corticon Work directories to point to specific locations in the docker file, using the following commands.

If a directory does not exist, make sure to create it first.

Deploying .cdd files and using brms.properties then work as before: copy the files to the appropriate location under the new directories.

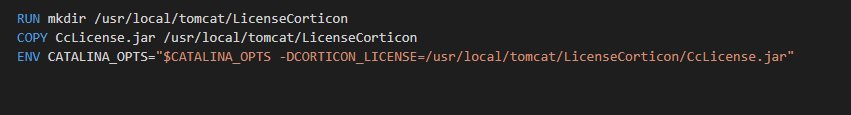
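The same settings can be sketched as Dockerfile instructions. The /opt/corticon paths are hypothetical, passing the properties through Tomcat's CATALINA_OPTS variable is an assumption about how they reach the JVM, and the -DCORTICON_WORK_DIR property name should be checked against the Corticon documentation.

```shell
#!/bin/sh
# Sketch: point Corticon Home and Work Directory at custom locations.
# Assumptions: hypothetical /opt/corticon paths, properties passed through
# CATALINA_OPTS, and -DCORTICON_WORK_DIR as the work directory property.
cat >> Dockerfile <<'EOF'
# Create the directories first if they do not exist yet
RUN mkdir -p /opt/corticon/home /opt/corticon/work/cdd
# Keep any options set earlier and add the Corticon locations
ENV CATALINA_OPTS="$CATALINA_OPTS -DCORTICON_HOME=/opt/corticon/home -DCORTICON_WORK_DIR=/opt/corticon/work"
# brms.properties now goes to the new work directory
COPY brms.properties /opt/corticon/work/
EOF
```

Referencing $CATALINA_OPTS inside ENV keeps options set by earlier ENV lines instead of overwriting them; if the variable is unset, Docker substitutes an empty string.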

Updating Corticon License

To change or update the license for the server, you copy the license into the container and then update an environment variable.

This is done by creating a directory “LicenseCorticon” in the Tomcat directory (/usr/local/tomcat) and copying CcLicense.jar into it. You then set an environment variable carrying the -DCORTICON_LICENSE system property, which Tomcat passes on to Corticon Server.
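A minimal sketch of these license steps. The directory and file names match the licenseFilePath shown later in this post; using CATALINA_OPTS to carry -DCORTICON_LICENSE is an assumption, and the bundled docker file may use a different variable.

```shell
#!/bin/sh
# Sketch: install a Corticon license into the image.
# Assumption: -DCORTICON_LICENSE is passed to the JVM via CATALINA_OPTS.
cat >> Dockerfile <<'EOF'
# Create the license directory inside Tomcat
RUN mkdir -p /usr/local/tomcat/LicenseCorticon
# Copy the license jar from the build context into it
COPY CcLicense.jar /usr/local/tomcat/LicenseCorticon/
# Tell Corticon Server where to find the license, keeping earlier options
ENV CATALINA_OPTS="$CATALINA_OPTS -DCORTICON_LICENSE=/usr/local/tomcat/LicenseCorticon/CcLicense.jar"
EOF
```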

This covers all the configurations needed in the docker file. Next, you need to build the docker image.

Build your Docker image

To build your Docker image, run the docker build command as shown below.

The -t option names and tags the image.

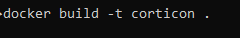

This builds a Docker image from the docker file in the current directory (“.”) and tags it corticon.

For more documentation and the options supported by the docker build command, refer to the Docker documentation.

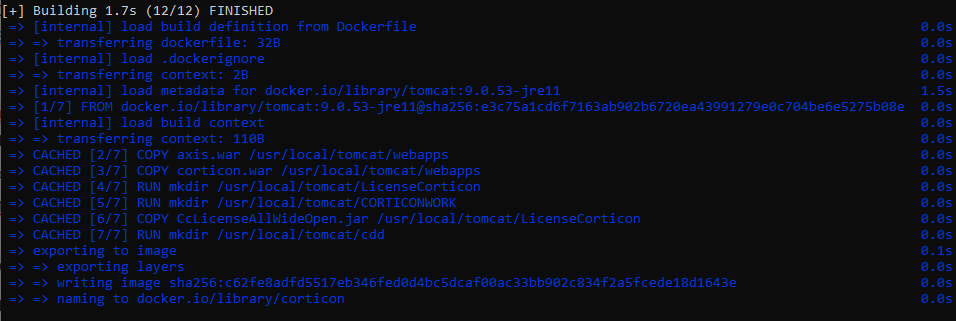

Running the command will create the docker image with the configurations specified in the docker file.
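If you script your builds, the build step can be wrapped in a small helper. This is a sketch: build_corticon_image is a hypothetical name, and DRY_RUN=1 is a convention of this example (not a Docker option) that prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: wrap the docker build step.
# build_corticon_image [tag] [context]; defaults: tag "corticon", context "."
# DRY_RUN=1 prints the command instead of running it (not a Docker option).
build_corticon_image() {
  tag="${1:-corticon}"
  context="${2:-.}"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "docker build -t $tag $context"
  else
    docker build -t "$tag" "$context"
  fi
}
```

Calling build_corticon_image with no arguments runs docker build -t corticon ., the command described above.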

Now, the last step is to run the docker container.

Running Docker container

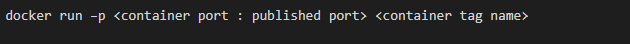

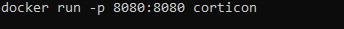

Now you are ready to run the Docker container, using the docker run command.

The -p option binds port 8080 of the container to TCP port 8080 of the host machine. Running this command starts your Docker container.

docker run supports more options, which are described in the Docker documentation.
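The run step can be wrapped the same way. This sketch assumes the image was tagged corticon and that Tomcat listens on 8080 inside the container; run_corticon_container and DRY_RUN are hypothetical names used only for this example.

```shell
#!/bin/sh
# Sketch: wrap the docker run step.
# run_corticon_container [image] [host-port]; defaults: "corticon" and 8080.
# Assumption: Tomcat listens on 8080 inside the container (the EXPOSEd port).
# DRY_RUN=1 prints the command instead of running it (not a Docker option).
run_corticon_container() {
  image="${1:-corticon}"
  host_port="${2:-8080}"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "docker run -p $host_port:8080 $image"
  else
    # -p HOST:CONTAINER publishes the container port on the host
    docker run -p "$host_port:8080" "$image"
  fi
}
```

For example, run_corticon_container corticon 9090 makes the server reachable on port 9090 of the host while Tomcat keeps listening on 8080 inside the container.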

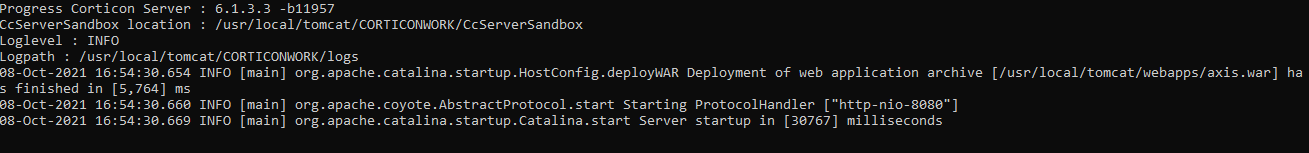

If Corticon has been deployed correctly to Tomcat, you should see the Corticon Server version in the Tomcat console output. For example:

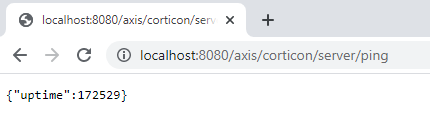

You can also check whether Corticon Server has started correctly by sending a GET request to the Corticon ping REST endpoint, for example from a browser:

http://<ip:port>/axis/corticon/server/ping

If the server is up and running, the request returns a JSON object containing the uptime:

{"uptime" : <time in milliseconds>}
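The same check can be scripted instead of done in a browser. This is a sketch that assumes curl is installed and the server is reachable at localhost:8080; ping_corticon and has_uptime are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: check the Corticon ping endpoint from a script.
# Assumptions: curl is installed; the server is reachable at localhost:8080.

# ping_corticon [base-url]: print the body returned by the ping endpoint
ping_corticon() {
  base="${1:-http://localhost:8080}"
  # -f fails on HTTP errors, -s hides progress, -S still reports errors
  curl -fsS "$base/axis/corticon/server/ping"
}

# has_uptime <json-body>: succeed if the body contains a numeric "uptime" field
has_uptime() {
  printf '%s\n' "$1" | grep -Eq '"uptime"[[:space:]]*:[[:space:]]*[0-9]+'
}
```

Usage: has_uptime "$(ping_corticon)" && echo "Corticon Server is up"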

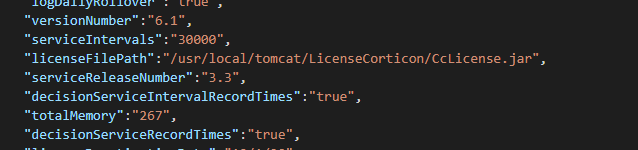

To check whether the license has been updated properly, you can check the server properties using the following REST endpoint:

http://<ip:port>/axis/corticon/server/getProperties

The response is a JSON object with the server properties. If the license has been updated properly, the licenseFilePath attribute in the JSON should contain the updated path:

"licenseFilePath": "/usr/local/tomcat/LicenseCorticon/CcLicense.jar"

You can also use the WebConsole to view the Server details to check if the proper license has been applied to the Corticon Server.
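The getProperties check can also be scripted. This sketch assumes the endpoint answers a plain GET like the ping endpoint does, that curl is installed, and that the path contains no escaped quotes, since sed is not a JSON parser; get_properties and license_path are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: read licenseFilePath from the getProperties response.
# Assumptions: GET works on this endpoint, curl is installed, localhost:8080,
# and the value contains no escaped quotes (sed is not a JSON parser).

# get_properties [base-url]: print the server properties JSON
get_properties() {
  base="${1:-http://localhost:8080}"
  curl -fsS "$base/axis/corticon/server/getProperties"
}

# license_path <json-body>: print the value of the licenseFilePath attribute
license_path() {
  printf '%s\n' "$1" |
    sed -n 's/.*"licenseFilePath"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

Usage: [ "$(license_path "$(get_properties)")" = /usr/local/tomcat/LicenseCorticon/CcLicense.jar ] && echo "License updated"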

These are all the steps needed to deploy Corticon Server successfully to a Tomcat Docker image. As you may have noticed, we covered the docker configuration specific to Tomcat. Corticon can also be deployed to other supported application servers; for the settings each one requires, refer to the Corticon documentation on deploying Corticon to a specific application server.

The configuration needed to deploy a web-app to a different image can differ based on the requirements of that image. However, the Corticon-specific configuration, such as setting CORTICON_HOME and CORTICON_WORK_DIR or updating the license, stays the same: you still pass system properties such as -DCORTICON_LICENSE to update the license or -DCORTICON_HOME to use a different location for Corticon Home.

Conclusion

As you can see, it is simple to deploy Corticon on Docker. The docker file can be customized to any extent to fit your business needs, and Corticon can be deployed to any supported OS or application server Docker image. Different Docker images might need different configurations or customizations to deploy the web application to the container image.

Suvasri Mandal

Suvasri Mandal is a Principal Software Engineer at Progress. She is responsible for design, development, testing and support of Corticon BRMS. She has a background in the areas of Business Rules and Complex Event Processing.
