Deploying Hybrid Data Pipeline on Microsoft Azure

Introduction to Hybrid Data Pipeline

Hybrid Data Pipeline is our innovative cloud connectivity solution designed to simplify and streamline data integration between cloud, mobile, and on-premises sources through a secure, firewall-friendly integration. With Hybrid Data Pipeline, developers can build data-centric applications faster and manage them more easily. SaaS ISVs can drive new wins through integration with customers’ legacy applications and data. IT can immediately provide a plug-and-play solution that extends the reach of BI and ETL, RESTifies any database to improve developer productivity, and accelerates the delivery of new services.

You can deploy Hybrid Data Pipeline on your own servers anywhere in the world or, with the explosion in the use of cloud computing, on platforms such as Azure, Heroku, and AWS. We put together this tutorial to help you deploy Hybrid Data Pipeline on the Microsoft Azure platform.

Setting up VM in Azure

- To get started, you need an Azure account. If you don’t have one, register here and log in to the portal.

- Once you have logged in to the portal, create a new Red Hat 6.8 VM. If you are unsure how to create it, you can follow this official step-by-step documentation.

- After the VM is created, open the settings for the public IP address of the VM. You can reach these settings from All Resources (Rubik Cube icon) in the portal.

- Go to the Configuration view in the Public IP address settings for the VM and set the DNS name label for the VM. The resulting hostname will be in the format xxxxx.region.cloudapp.azure.com
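The hostname format described above can be sketched as a small shell helper, which is handy for reusing the name in later steps. The function name `azure_fqdn` and the sample label and region are hypothetical and only illustrate the xxxxx.region.cloudapp.azure.com pattern; they are not part of Hybrid Data Pipeline or Azure tooling.

```shell
#!/usr/bin/env bash
# Sketch: compose the hostname of an Azure VM from its DNS name label
# and region, following the xxxxx.region.cloudapp.azure.com format.
# azure_fqdn is a hypothetical helper name used for illustration only.
azure_fqdn() {
  local label="$1"   # DNS name label set on the public IP address
  local region="$2"  # Azure region of the VM (assumed value, e.g. eastus)
  printf '%s.%s.cloudapp.azure.com\n' "$label" "$region"
}

# Example usage with placeholder values:
#   HDP_HOST="$(azure_fqdn myhdp eastus)"
#   echo "$HDP_HOST"
```

Storing the result in a variable such as `HDP_HOST` (also an assumed name) keeps the installer hostname prompt, the port test, and the browser URL consistent.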

Installing Hybrid Data Pipeline on Azure

- Download Hybrid Data Pipeline.

- If you are on Linux, use the sftp command to copy the installer from your local machine to the VM in Azure by using the following commands.

sftp <LogonName>@<AzureVM-ipaddress/DNS>

put /path/to/PROGRESS_DATADIRECT_HDP_SERVER_LINUX_64_INSTALL.bin

- If you are on Windows, you can use an open source application such as WinSCP to copy the installer to the Azure VM.

- Once the installer transfer is complete, use SSH to connect to the Azure VM that you created.

- If you are on Linux, use the following command to log in:

ssh <LogonName>@<AzureVM-ipaddress/DNS>

- If you are on Windows, use PuTTY to SSH into your Azure VM.

- Once you have logged in, you should find the installer that you copied from your local machine in the user's home folder.

- If the installer package is not executable, run the following command to make it executable.

chmod +x PROGRESS_DATADIRECT_HDP_SERVER_LINUX_64_INSTALL.bin

- To start the installation, run the following command, which starts the installer in console mode.

./PROGRESS_DATADIRECT_HDP_SERVER_LINUX_64_INSTALL.bin

- During the installation:

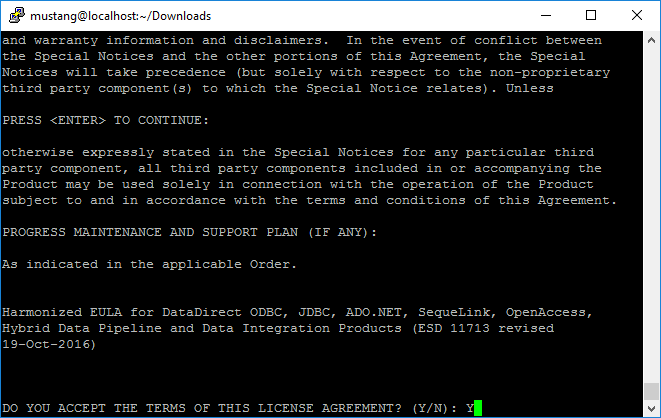

- Make sure you read and understand the license agreement, then accept it to continue the installation.

Fig: Hybrid Data Pipeline - License Agreement

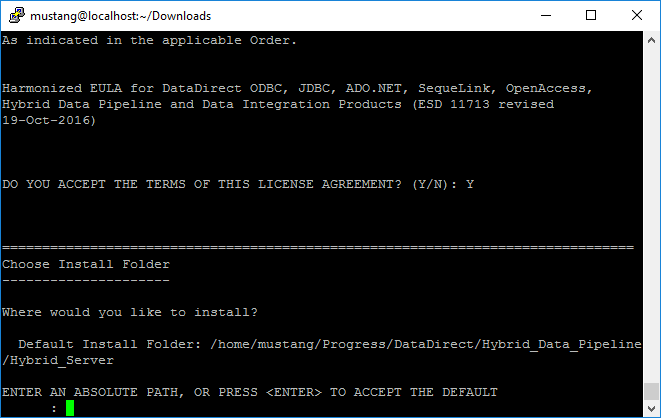

- By default, the installation directory is the following, but you are free to change it.

/home/users/<username>/Progress/DataDirect/Hybrid_Data_Pipeline/Hybrid_Server

Fig: Hybrid Data Pipeline – Install Folder setting

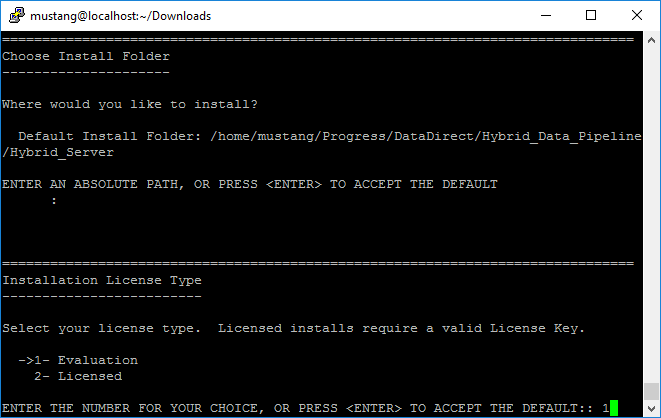

- Choose the type of installation when prompted. If you are evaluating Hybrid Data Pipeline, choose Evaluation. If you have purchased a license, choose Licensed installation and enter your license key to proceed.

Fig: Hybrid Data Pipeline – Installation License Type

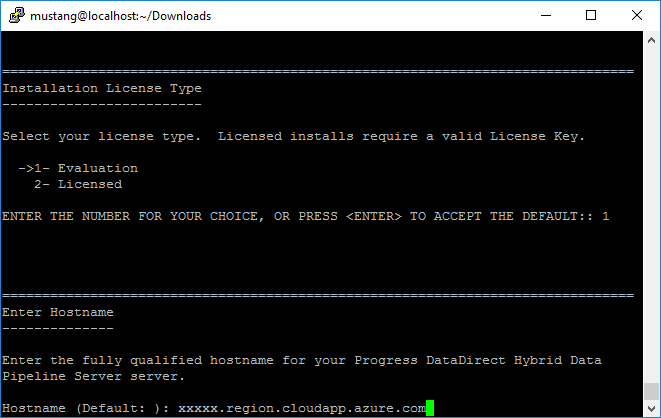

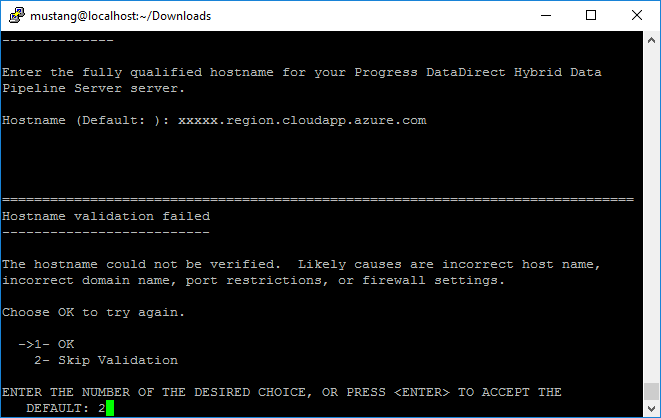

- When the installer asks you to enter the hostname for your server, enter the full DNS name that you configured on Azure for the VM in the earlier steps. It will be in the format xxxxx.region.cloudapp.azure.com

Fig: Hybrid Data Pipeline – Hostname configuration

- The installer tries to validate the hostname, but the validation will fail. Ignore the validation failure and proceed with the installation.

Fig: Hybrid Data Pipeline – Hostname validation

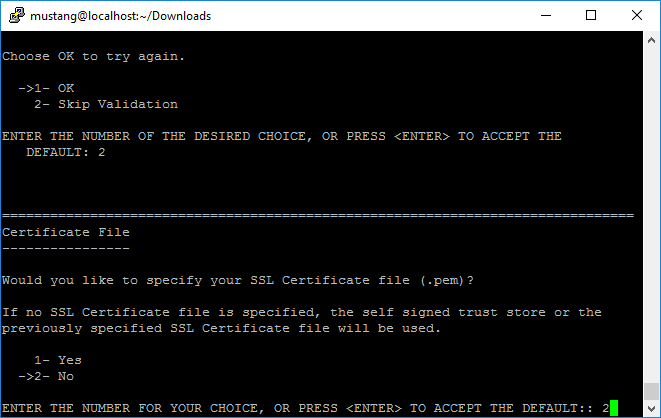

- When the installer prompts for an SSL certificate file, select No to use the self-signed trust store that is included with the installation. If you have an SSL certificate file that you want to use instead, select Yes and provide the path to that file.

Fig: Hybrid Data Pipeline – SSL Certificate configuration

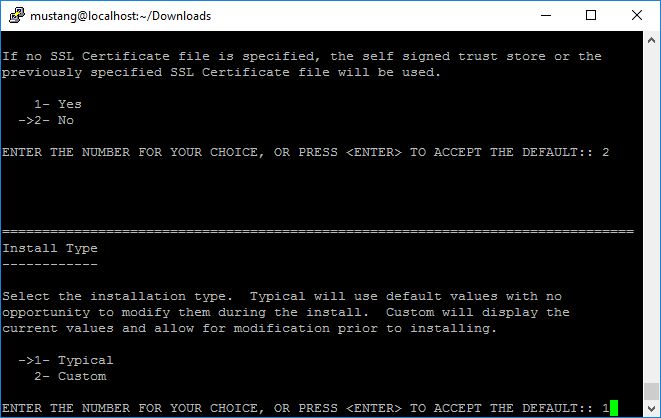

- To use the default settings, choose Typical installation (1). If you want to configure the installation with your own settings, choose Custom installation (2).

Fig: Hybrid Data Pipeline – Installation Type

- Next, you should see Ready to Install information with all the configurations that you have made. Press ENTER to install Hybrid Data Pipeline with those settings.

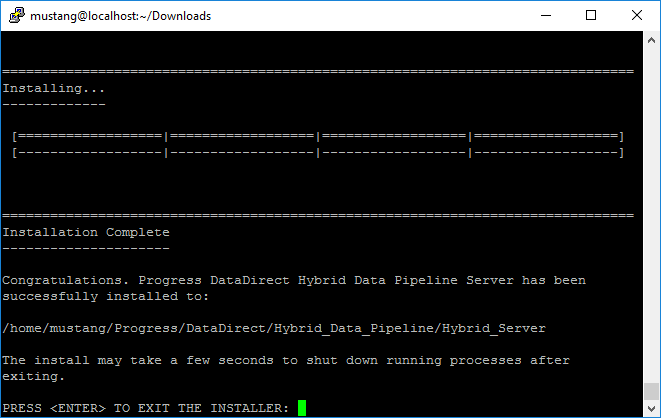

- After the installation is complete, you will see an Install Complete message. To exit the installer, press ENTER.

Fig: Hybrid Data Pipeline – Installation Complete

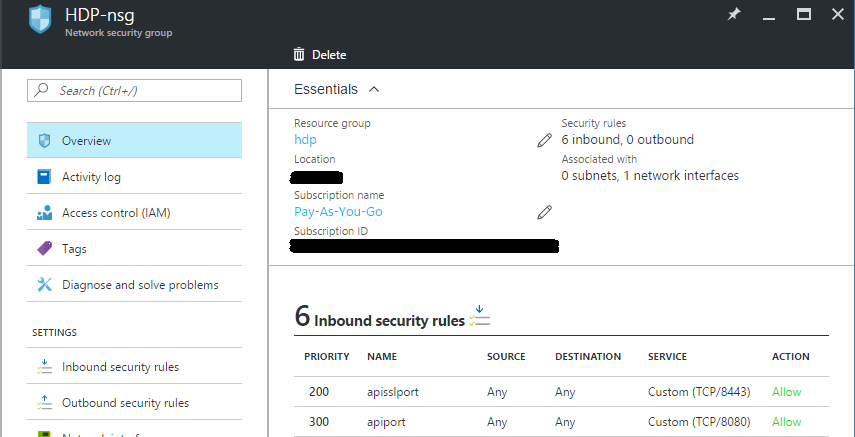

- Before starting the Hybrid Data Pipeline server, configure the VM firewall and the Azure network inbound security rules to accept connections on ports 8080 and 8443, which the server uses to provide its services. Port 8080 is for HTTP and port 8443 is for HTTPS.

- To configure the firewall on Red Hat 6.8 to accept connections on ports 8080 and 8443, run the following commands.

sudo iptables -I INPUT -p tcp -m tcp --dport 8080 -j ACCEPT

sudo iptables -I INPUT -p tcp -m tcp --dport 8443 -j ACCEPT

sudo service iptables save

- Now go to the Azure portal and, under All resources, open <VMName>-nsg. Click the Inbound security rules tab to show the inbound rules configuration.

- Click Add to open a form where you can configure a new rule. Below is an example of a rule for port 8443.

Name: <Desired name for the rule>

Priority: <Priority you want to assign> (the lower the number, the higher the priority)

Source: Any

Service: Custom

Protocol: TCP

Port range: 8443

Action: Allow

Fig: Inbound Security rules configuration on Azure

- In a similar way, you can configure a security rule for port 8080 if you want to allow HTTP connections.

- Now test whether the ports are open from a local machine by opening a terminal and running the following command. If you are not able to connect, make sure that you have properly configured the firewall and the Azure security rules described in the preceding steps.

telnet xxxxx.region.cloudapp.azure.com 8443

- Restart the Azure VM using the following command:

sudo shutdown -r now
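On machines where telnet is not installed, the port test from this section can be approximated with a short shell function. This is a minimal sketch, not part of Hybrid Data Pipeline: the name `port_open` is hypothetical, and it assumes a bash build with `/dev/tcp` support plus the coreutils `timeout` command.

```shell
#!/usr/bin/env bash
# Sketch: return 0 if a TCP connection to host:port succeeds within
# 5 seconds, non-zero otherwise. port_open is a hypothetical helper name.
# Assumes bash with /dev/tcp redirection support and coreutils timeout.
port_open() {
  local host="$1" port="$2"
  # Run the connection attempt in a child bash so that timeout can stop it;
  # host and port are passed as positional arguments ($0 and $1).
  timeout 5 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Example usage with the placeholder hostname from this tutorial:
#   for p in 8080 8443; do
#     if port_open xxxxx.region.cloudapp.azure.com "$p"; then
#       echo "port $p is reachable"
#     else
#       echo "port $p is NOT reachable"
#     fi
#   done
```

A failure here can mean either the iptables rules on the VM or the Azure inbound security rules are blocking the port, so both are worth rechecking.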

Starting Hybrid Data Pipeline Server

- Now that we have installed and configured everything for Hybrid Data Pipeline to run properly, it’s time to start the server.

- Login on to Azure VM through SSH and run the following commands to start Hybrid Data Pipeline server

cd /path/to/ Progress/DataDirect/Hybrid_Data_Pipeline/Hybrid_Server/ddcloud/

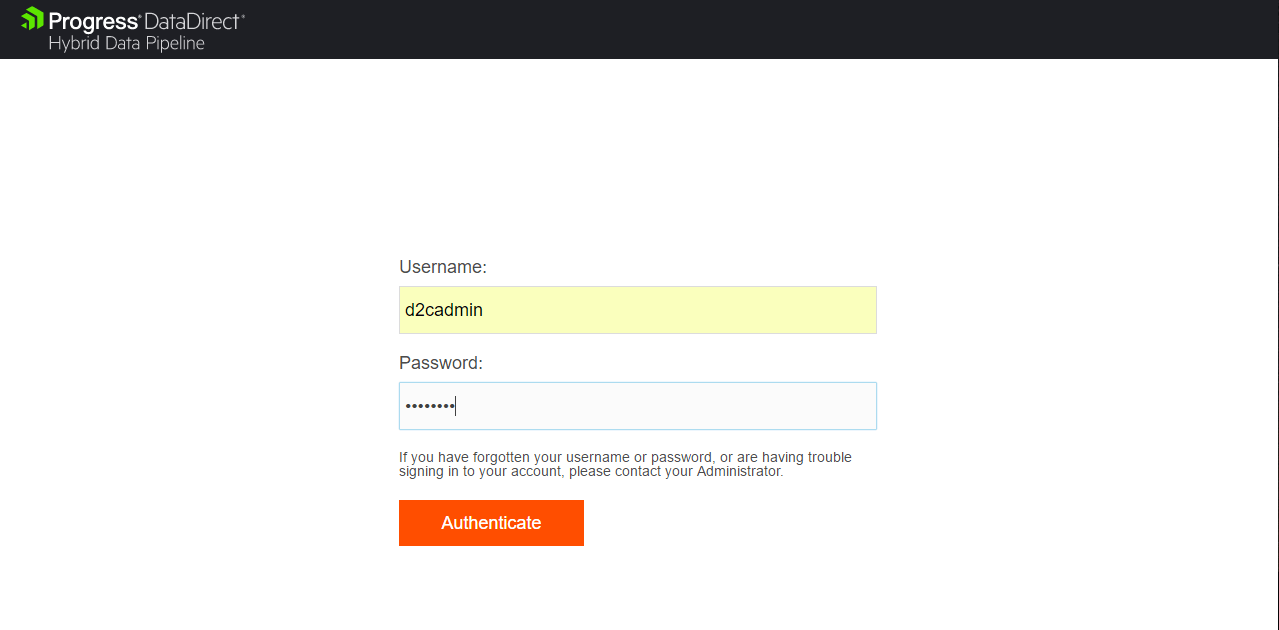

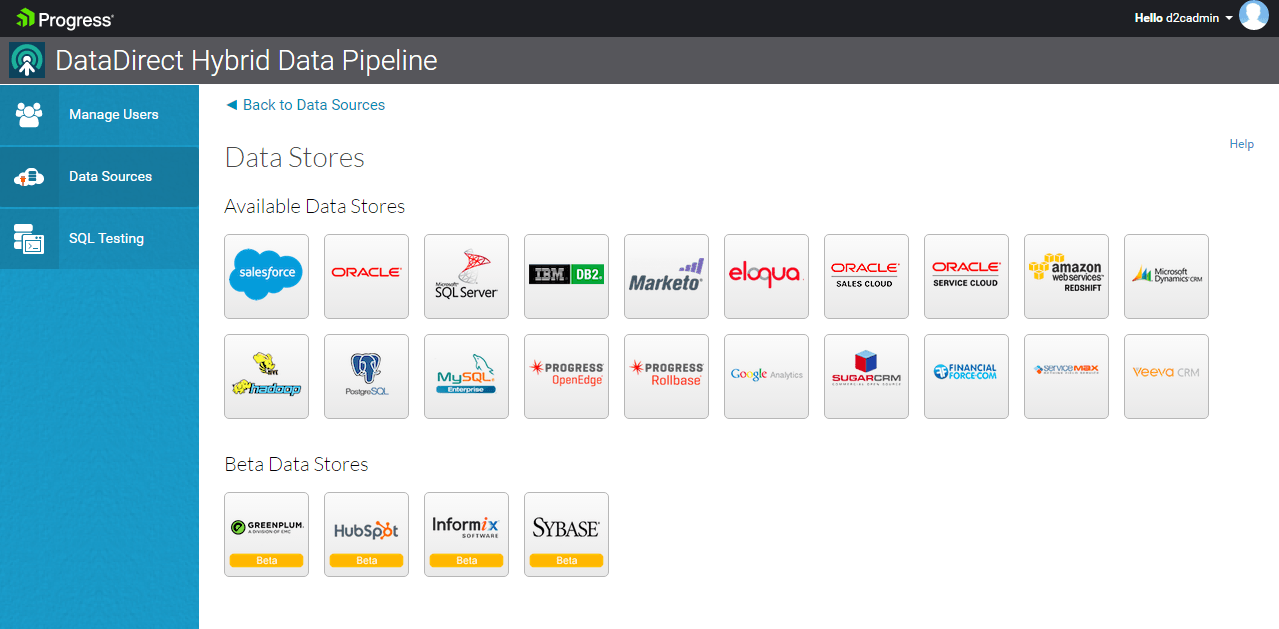

./start.sh - Open your browser and browse to https://xxxxx.region.cloudapp.azure.com:8443, which opens the Hybrid Data Pipeline Login screen. Use d2cadmin/d2cadmin as username and password to login into Hybrid Data Pipeline Dashboard. Following are couple of screenshots of Hybrid Data Pipeline for your reference.

Fig: Login Screen for Progress DataDirect Hybrid Data Pipeline

Fig: Data Stores supported in Hybrid Data Pipeline
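Before opening a browser, it can be useful to confirm from a terminal that the server answers on the HTTPS port. The following is a sketch under stated assumptions: the helper names `hdp_url` and `hdp_is_up` are hypothetical, curl is assumed to be installed, and `--insecure` is used only because the default installation described above relies on a self-signed certificate.

```shell
#!/usr/bin/env bash
# Sketch: build the login URL and check that the server responds.
# hdp_url and hdp_is_up are hypothetical helper names for illustration.

# Print the HTTPS URL for a host; the port defaults to 8443.
hdp_url() {
  local host="$1" port="${2:-8443}"
  printf 'https://%s:%s/\n' "$host" "$port"
}

# Return 0 if any HTTP response is received within 10 seconds.
# --insecure skips certificate verification (self-signed certificate).
hdp_is_up() {
  curl --insecure --silent --output /dev/null --max-time 10 "$(hdp_url "$@")"
}

# Example usage with the placeholder hostname from this tutorial:
#   if hdp_is_up xxxxx.region.cloudapp.azure.com; then
#     echo "Hybrid Data Pipeline is responding"
#   fi
```

If you install your own SSL certificate instead of the self-signed one, the `--insecure` option should no longer be needed.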

Congratulations,

Now that you have successfully deployed the world’s most advanced hybrid data access solution, feel free to configure your data sources in Hybrid Data Pipeline and integrate the data into your applications using Hybrid Data Pipeline’s standards-based ODBC and JDBC connectivity or its REST API, one of the most advanced OData standard APIs. Note that the trial is valid for 90 days, during which you have complete access to all of the data stores. To learn more about Progress DataDirect Hybrid Data Pipeline, you can visit this page or watch this short video overview.